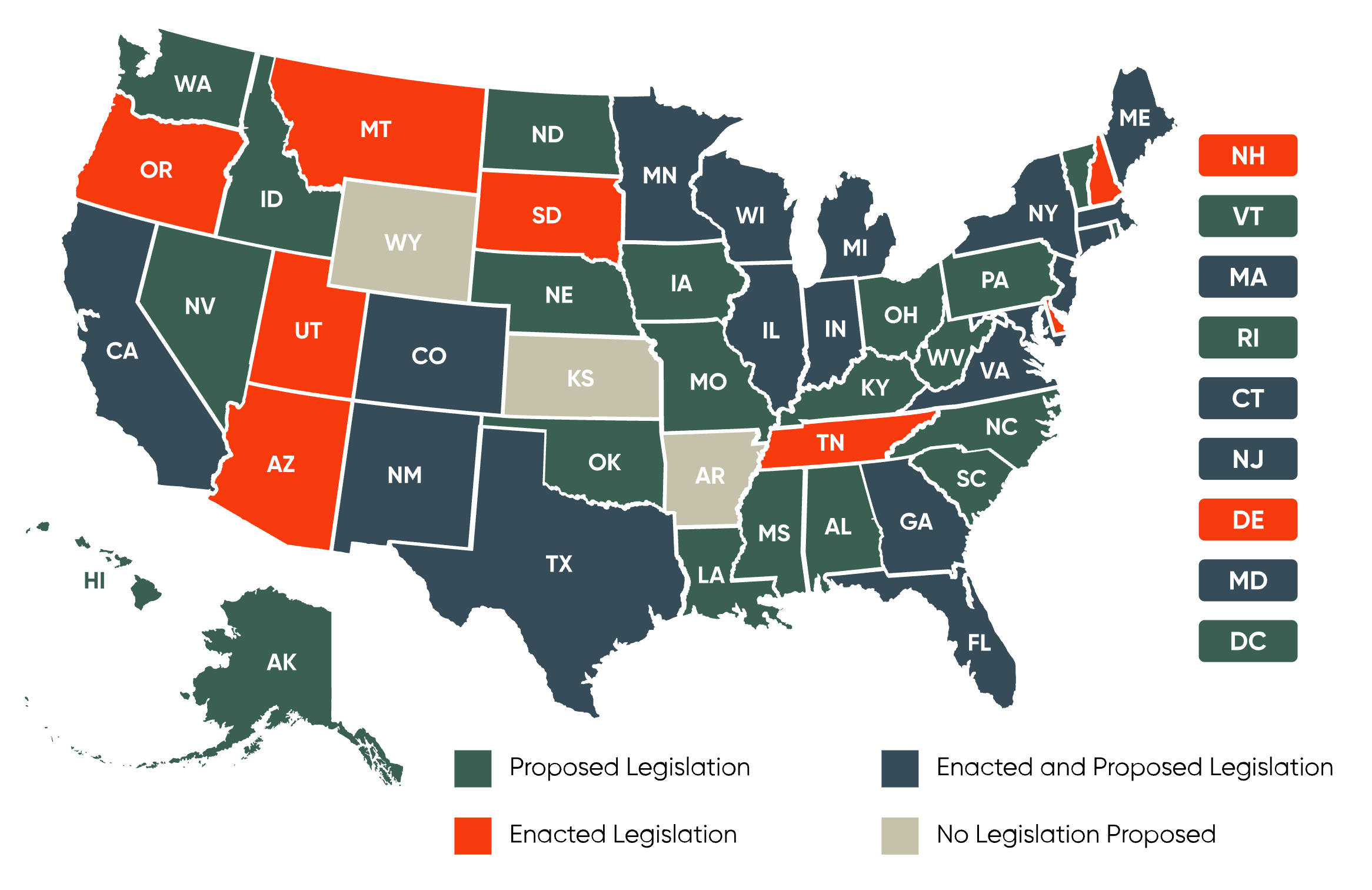

US state-by-state AI legislation snapshot

Summary

Artificial Intelligence (AI), once limited to the pages of science fiction novels, has now been adopted by more than 40% of enterprise-scale businesses in the United States, and just as many organizations are working to embed AI into current applications and processes.[1] As companies increasingly integrate AI into their products, services, processes, and decision-making, they need to do so in ways that comply with the various state laws that have been passed or proposed to regulate the use of AI.

Click the map below to view detailed state-by-state AI legislation information.

Or monitor AI regulation in depth with our new state-by-state AI legislation table.

As is the case with most new technologies, the establishment of regulatory and compliance frameworks has lagged behind AI’s rise. This is set to change, however, as AI has caught the attention of federal and state regulators and oversight of AI is ramping up.

In the absence of comprehensive federal legislation on AI, there is now a growing patchwork of current and proposed AI regulatory frameworks at the state and local level. Even with federal legislation uncertain, it is clear that momentum for AI regulation is at an all-time high. Consequently, companies stepping into the AI stream face an uncertain regulatory environment that must be closely monitored and evaluated to understand its impact on risk and on the commercial potential of proposed use cases.

To help companies achieve their business goals while minimizing regulatory risk, BCLP actively tracks proposed and enacted AI regulatory bills from across the United States to enable our clients to stay informed in this rapidly changing regulatory landscape. The interactive map is updated regularly to include legislation that, if passed, would directly impact a business’s development or deployment of AI solutions.[2] Click the states to learn more.

We have also created an AI regulation tracker for the UK and EU to keep you informed in this rapidly changing regulatory landscape.

[1] IBM Global AI Adoption Index 2024.

[2] We have also included laws addressing automated decision-making, because AI and automation are increasingly integrated. Not all automated decision-making systems involve AI, however, so businesses using such systems will need to understand how their particular systems are designed. We have omitted laws on biometric data and facial recognition, as well as sector-specific administrative laws.

Related Capabilities

- Data Privacy & Security

Meet The Team

Partner; Chair – Global Data Privacy and Security Practice; and Global Practice Group Leader – Technology, Commercial & Data, Boulder